Meta introduced new safety features for Teen Accounts on Instagram on Wednesday. The Menlo Park-based social media giant said it is expanding the protection and safety features of Teen Accounts to give teenagers more tools when they exchange messages with other users through the platform’s direct messaging (DM) feature. In addition, teens will now be able to see when another user joined the platform, along with a set of safety tips to keep in mind when talking to strangers.

In a newsroom post, Meta said the new features are part of the company’s ongoing efforts to protect young people from both direct and indirect harm and to create age-appropriate experiences for them. The social media giant also highlighted that existing protections such as safety notices, location notices, and nudity protection have helped millions of teens avoid harmful experiences on the platform.

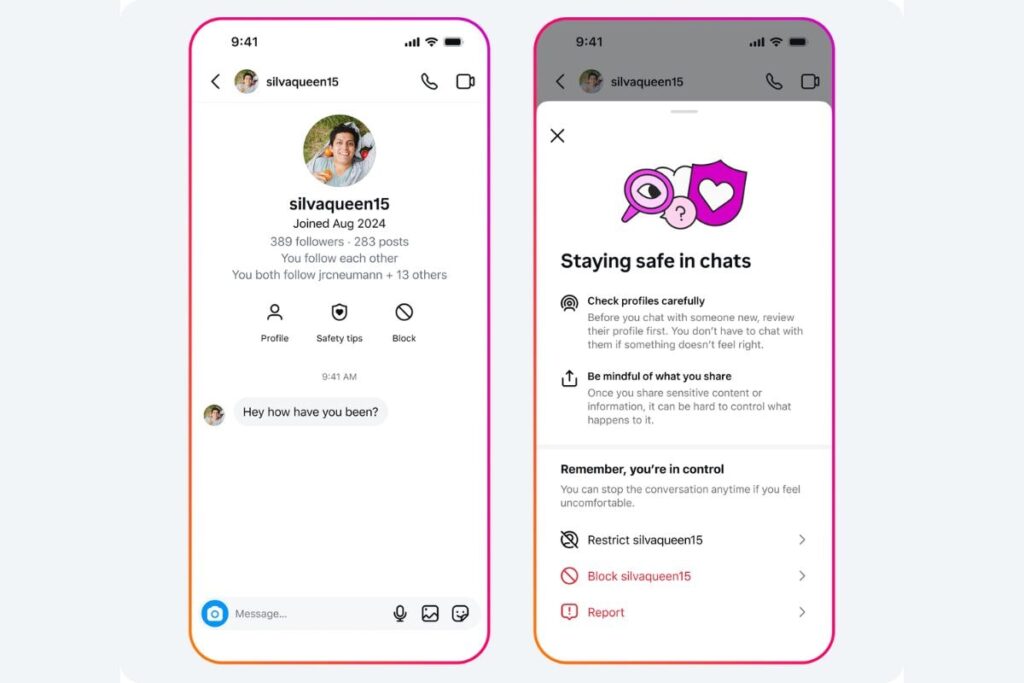

Both of these safety features are available in Instagram DMs. The first one shows safety tips when teens are about to message another user, even if the two follow each other. These tips ask teens to carefully check the other person’s profile and remind them that if something doesn’t feel right, they don’t have to engage. The tips also remind users to be mindful of what they share with others.

When a teen starts a new chat with another user, they will see the month and year that account joined Instagram at the top of the chat interface. Meta says this will give users more context about the accounts they are messaging and make it easier to spot potential scammers.

The second feature appears when a teen tries to block another user from their DMs. The bottom sheet will now show a combined “block and report” option, which lets them both block the account and report it to Meta. The company says the combined button will encourage teens to flag problematic accounts to the company instead of simply ending the conversation.

Instagram’s new combined “block and report” button

Photo Credit: Meta

Meta is also extending some Teen Account safety features to adult-run accounts that primarily feature children. These are accounts where the profile picture shows a child and where adults regularly share photos and videos of their children. Generally, these accounts are managed by parents or children’s talent managers who run them on behalf of children under the age of 13.

Notably, while Meta allows adults to run an account representing a child as long as they mention this in the bio, accounts run by children themselves are deleted.

The company said these adult-managed accounts will now receive some of the protections available to Teen Accounts. These include automatically placing them in Instagram’s strictest message settings to prevent unwanted messages and turning on Hidden Words to filter offensive comments. Instagram will also start showing these accounts a notification at the top of their feed telling them that their safety settings have been updated.