Meta is adding some of its teen safety features to Instagram accounts that feature children, even if they are run by adults. Although children under the age of 13 are not allowed to sign up on the social media app, Meta allows adults such as parents and managers to run accounts for children and post videos and photos of them. The company says these accounts are overwhelmingly used in benign ways, but they are also targeted by predators who leave sexual comments and ask for sexual images in DMs.

In the coming months, the company will apply its strictest message settings to these adult-run children's accounts to prevent unwanted DMs. It will also automatically turn on Hidden Words for them so that account owners can filter unwanted comments on their posts. In addition, Meta will avoid recommending these accounts to accounts that users have blocked, to reduce the chances of predators finding them. The company will also make it harder for suspicious users to find them through search and will hide comments from potentially suspicious adults on their posts. Meta says it will continue to “take aggressive action” on accounts that break its rules: earlier this year, it removed 135,000 Instagram accounts for leaving sexual comments on, or requesting sexual images from, adult-managed accounts featuring children. It also deleted an additional 500,000 Facebook and Instagram accounts linked to those original accounts.

Meta introduced teen accounts on Instagram last year to automatically opt users between the ages of 13 and 18 into stricter privacy features. The company then launched teen accounts on Facebook and Messenger in April, and it is even testing AI age-detection technology to determine whether a supposedly adult user has lied about their birthday so that they can be moved to a teen account when needed.

Since then, Meta has rolled out more and more safety features meant for younger teens. It released Location Notice in June so that younger teens can find out when they are chatting with someone from another country, as sextortion scammers usually lie about their location.

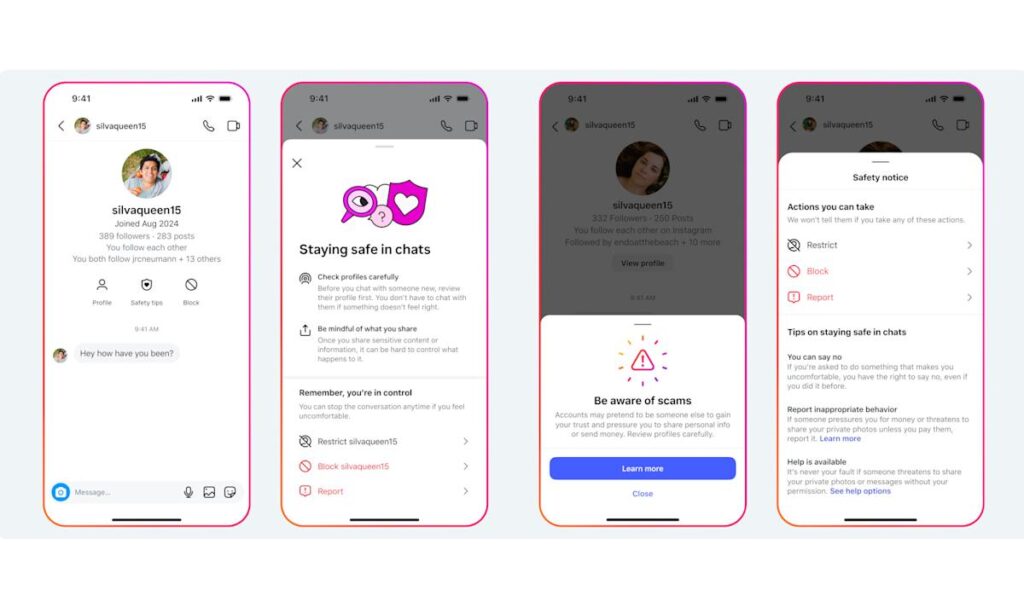

Today, Meta is also launching new ways for teens to view safety tips. When they chat with someone in DMs, they can now tap the “Safety Tips” icon at the top of the conversation to bring up a screen where they can restrict, block or report the other user. Meta has also launched a combined block and report option in DMs, so that users can take both actions together in one tap.